Running Llama 3.1 Locally: A Beginner's Guide

Llama 3.1 is an openly available Large Language Model (LLM) developed by Meta. Running it locally on your own computer has several advantages:

- Privacy: Your data stays on your hardware.

- Offline Access: Once the model is downloaded, no internet connection is needed.

- Cost-Effective: No API fees.

- Control: You choose which model to run and how it is configured.

Getting Started with Llama 3.1 Locally Using GPT4ALL

Step 1: Install GPT4ALL

- GPT4ALL is a user-friendly application that allows you to run various LLMs, including Llama 3.1, on your computer.

- Visit the GPT4ALL website and download the installer for your operating system (Windows, Mac, or Linux).

- Run the installer and follow the on-screen instructions to complete the installation.

Step 2: Launch GPT4ALL

- Once installed, open the GPT4ALL application.

- You’ll see a welcome message explaining how to use the software.

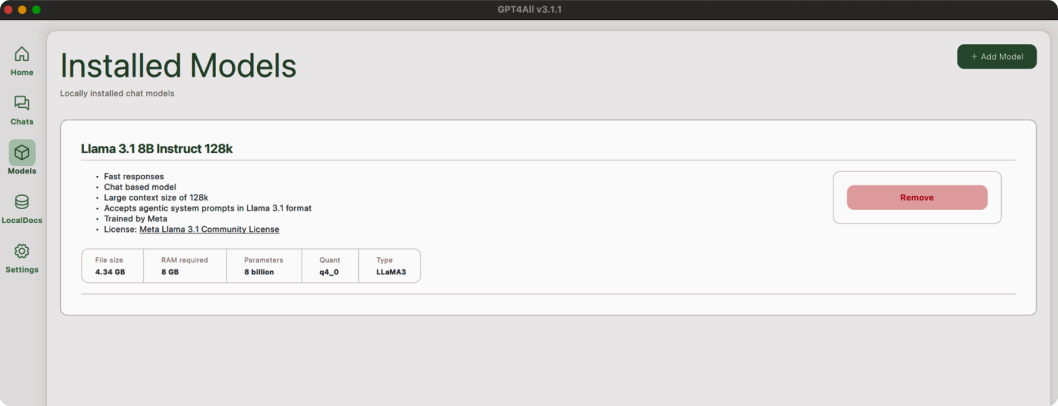

Step 3: Download the Llama 3.1 Model

- In GPT4ALL, navigate to the model selection area.

- Look for the Llama 3.1 model in the list of available models.

- Click on the download button next to the Llama 3.1 model.

- Wait for the download to complete. The model file is several gigabytes in size, so this may take some time depending on your internet speed.
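Before starting the download, it can help to confirm that there is room on disk and to get a rough idea of the wait. The following is only a sketch: the 4.7 GB file size and the 50 Mbit/s connection speed are assumed example values, not figures taken from GPT4ALL, so substitute the size reported for the model you pick and your own connection speed.

```python
import shutil

GB = 1000 ** 3  # decimal gigabyte, the unit download sizes are usually shown in


def has_room_for(model_size_gb: float, path: str = ".", headroom_gb: float = 2.0) -> bool:
    """Return True if the disk holding `path` can fit the model plus some headroom."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= (model_size_gb + headroom_gb) * GB


def estimate_download_minutes(model_size_gb: float, speed_mbit_per_s: float) -> float:
    """Estimate the download time in minutes, ignoring protocol overhead."""
    if speed_mbit_per_s <= 0:
        raise ValueError("speed must be positive")
    size_megabits = model_size_gb * 1000 * 8  # gigabytes -> megabits
    return size_megabits / speed_mbit_per_s / 60


# Assumed example values: a 4.7 GB model file on a 50 Mbit/s connection.
model_size_gb = 4.7
print("Enough free disk space:", has_room_for(model_size_gb))
print(f"Estimated download time: {estimate_download_minutes(model_size_gb, 50):.1f} minutes")
```

The disk check looks at the current folder by default; pass the folder where GPT4ALL stores its models as `path` if that folder is on a different drive.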

Step 4: Select the Llama 3.1 Model

- Once downloaded, select the Llama 3.1 model from your list of available models in GPT4ALL.

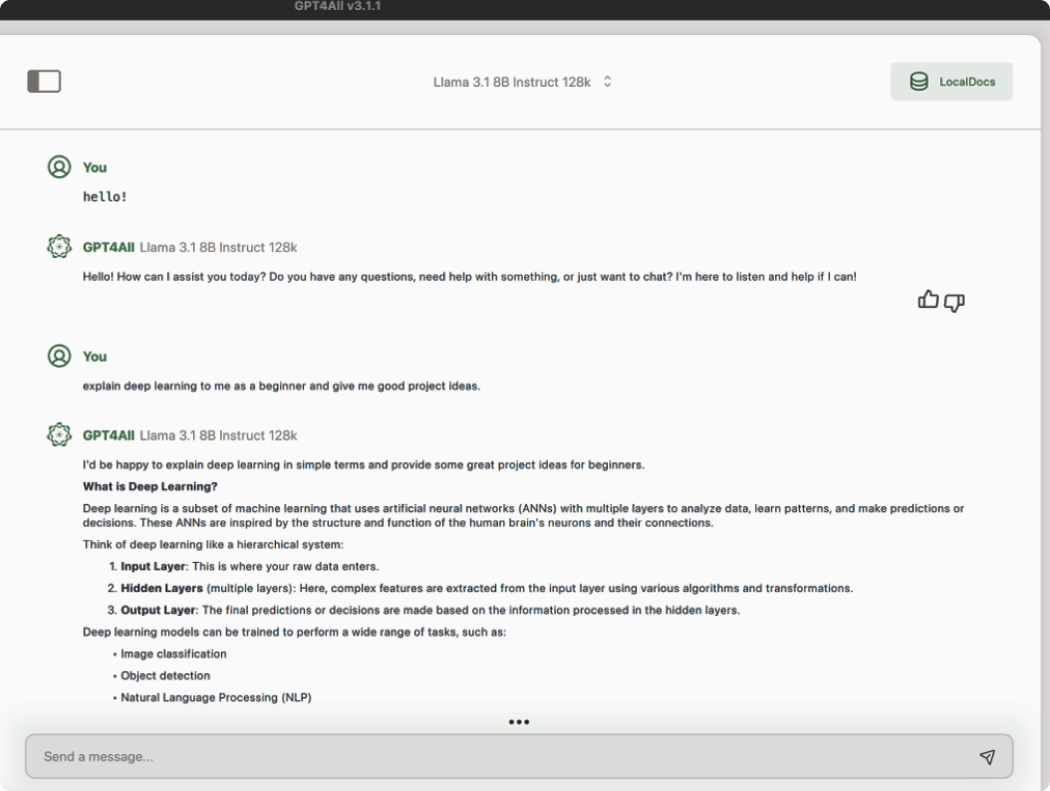

Step 5: Start Using Llama 3.1

- You can now start interacting with Llama 3.1 through the GPT4ALL interface.

- Type your questions or prompts into the text box and see the model’s responses.
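The same kind of exchange can also be scripted rather than typed into the chat window. The sketch below assumes the separate `gpt4all` Python package (installed with `pip install gpt4all`), which this guide does not otherwise cover. The model file name is an assumption as well; replace it with the name of the Llama 3.1 file on your machine. If the package cannot find the file, it will by default try to download it, and it may look in a different folder than the desktop application does.

```python
def ask_llama(prompt: str,
              model_name: str = "Meta-Llama-3.1-8B-Instruct-128k-Q4_0.gguf",
              max_tokens: int = 256) -> str:
    """Send one prompt to a locally stored model and return its reply.

    The default model_name is an assumed file name; use the name of the
    model file that is actually present on your machine.
    """
    # Imported inside the function so the package is only needed when called.
    from gpt4all import GPT4All

    model = GPT4All(model_name)  # loads the model file (downloads it if missing)
    with model.chat_session():   # wraps the prompt in the model's chat format
        return model.generate(prompt, max_tokens=max_tokens)
```

Calling `print(ask_llama("Explain what a large language model is in two sentences."))` should print a short reply once the model has loaded. The first call is the slowest because the whole model file has to be read into memory.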

Additional Features

GPT4ALL also lets you use local documents with the model (click on LocalDocs). You can point it at your own text files and have the model analyze or answer questions about them. The files are read on your computer, so they stay on your hardware.
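To give a feel for the general idea of answering questions from your own documents, here is a toy sketch. It is not how GPT4ALL implements LocalDocs. It simply picks the passage that shares the most words with the question and places it in front of the question, so the model has the relevant text to work from. The function names and the sample notes are hypothetical.

```python
import re


def words(text: str) -> set[str]:
    """Lower-case a text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def most_relevant(question: str, passages: list[str], top_k: int = 1) -> list[str]:
    """Rank passages by how many words they share with the question."""
    q = words(question)
    ranked = sorted(passages, key=lambda p: len(q & words(p)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Put the best-matching passages in front of the question."""
    context = "\n".join(f"- {p}" for p in most_relevant(question, passages))
    return f"Use only these notes to answer.\n{context}\n\nQuestion: {question}"


# Hypothetical snippets standing in for the contents of your own files.
notes = [
    "The quarterly report is due on the first Friday of April.",
    "The office printer is on the second floor next to the kitchen.",
]
print(build_prompt("When is the quarterly report due?", notes))
```

Word overlap is a deliberately crude way to find the relevant passage, chosen here only because it needs no extra libraries.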

Tips for Beginners

- Start with simple queries to get a feel for how the model responds.

- Experiment with different types of questions or tasks to understand the model’s capabilities.

- Remember that Llama 3.1, like all AI models, can make mistakes or give inaccurate information, so double-check anything important.

- Be patient if the model takes a moment to respond, especially for complex queries, since every response is generated on your own hardware.

By following these steps, you’ll be able to run Llama 3.1 on your local machine and get hands-on experience with one of the latest openly available LLMs, while keeping control over your data and usage.