Introduction to the Core Concepts and Features of RAG

Large Language Models (LLMs) are capable, but they are limited to what they learned during training. Retrieval-Augmented Generation (RAG) is a technique that addresses this limit by supplying an LLM with information from external knowledge sources at query time. Unlike a standalone LLM, which relies solely on its training data, a RAG system uses embeddings and a vector database to retrieve relevant passages from external documents, which can improve the accuracy and relevance of the generated content. Combining retrieval with generation lets the system draw on data beyond the model's training set and produce better-informed, more context-specific responses.

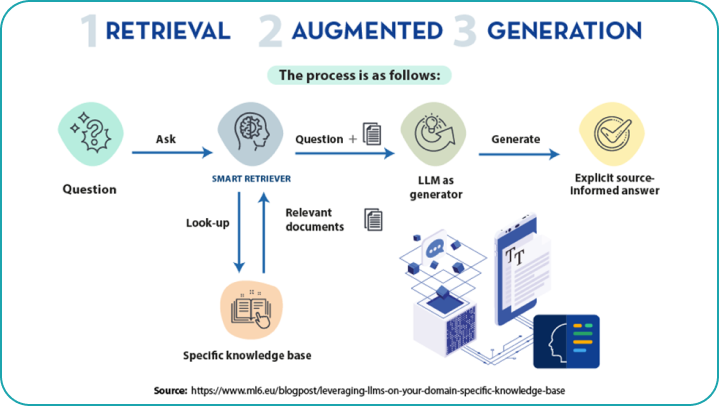

How RAG Works

RAG operates through a two-step process: retrieval and generation. First, the user's input is turned into a query, typically by converting it into an embedding. This query is then used to search a vector database that contains indexed documents, each represented by its own embedding. Embeddings are numerical representations of text that capture semantic meaning, so documents with similar meaning sit close together and can be found efficiently by similarity search. Once the most relevant documents are identified, they are added to the prompt as additional context for the generation phase, where the LLM produces a response that incorporates the retrieved information.
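The two steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: `embed` is a toy bag-of-words stand-in for a learned embedding model, `VectorStore` is a hypothetical in-memory list rather than a real vector database, and the generation step is left out, so the code stops at the prompt that would be sent to an LLM. The sample documents are invented for the example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a sparse bag-of-words count vector.
    A real system would call a learned embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class VectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self):
        self._items = []  # list of (embedding, original text)

    def add(self, text: str) -> None:
        self._items.append((embed(text), text))

    def search(self, query: str, k: int = 2):
        """Return the k (score, text) pairs most similar to the query."""
        q = embed(query)
        scored = [(cosine(q, vec), text) for vec, text in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


def build_prompt(question: str, store: VectorStore, k: int = 2) -> str:
    """Retrieval step plus prompt assembly; the LLM call itself is omitted."""
    hits = store.search(question, k)
    context = "\n".join(f"- {text}" for _, text in hits)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


store = VectorStore()
store.add("The warranty on the X100 laptop lasts two years.")
store.add("Our office is closed on public holidays.")
store.add("The X100 laptop ships with a 65 watt charger.")

prompt = build_prompt("How long is the X100 laptop warranty?", store, k=2)
print(prompt)
```

With these three documents, the two laptop sentences score highest and end up in the prompt, while the unrelated office-hours sentence is left out. In a real deployment the final string would be passed to an LLM, which is the generation step.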

Potential Drawbacks of RAG

Despite its advantages, RAG has potential drawbacks. Because it relies on external databases, the quality and reliability of the generated content depend on the accuracy and relevance of the retrieved documents: if retrieval returns outdated or off-topic material, the response is likely to reflect it. The retrieval step also adds computational cost, which can increase response time. Moreover, ensuring the security and privacy of sensitive information within the databases is crucial, as breaches could lead to misuse of data.
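Two of these drawbacks can at least be observed in code. The sketch below, which is an illustration rather than a recommended design, drops retrieved passages whose similarity score falls under a cutoff so that weak matches do not reach the prompt, and it times the call to show how retrieval overhead could be measured. The helper names `filter_hits` and `timed`, the example scores, and the `0.3` cutoff are all assumptions made for the example; a sensible cutoff depends on the embedding model and the data.

```python
import time


def filter_hits(hits, min_score=0.3):
    """Keep only (score, text) pairs whose similarity meets the cutoff.
    The default cutoff is an arbitrary value chosen for illustration."""
    return [(score, text) for score, text in hits if score >= min_score]


def timed(fn, *args, **kwargs):
    """Run fn and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


# Hypothetical retrieval results: (similarity score, passage text).
hits = [
    (0.57, "The warranty on the X100 laptop lasts two years."),
    (0.14, "Our office is closed on public holidays."),
]

kept, seconds = timed(filter_hits, hits, min_score=0.3)
print(kept)
print(f"filtering took {seconds:.6f} s")
```

Wrapping the real retrieval call in `timed` in the same way gives a per-request figure for the added latency.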

Conclusion

Retrieval-Augmented Generation is a significant advance for LLM-based systems, extending what a model can do by integrating external knowledge sources. Understanding its core features, applications, and potential drawbacks enables businesses to use RAG to improve efficiency, accuracy, and innovation in their operations. As AI continues to evolve, RAG stands out as a practical way to build more capable and contextually aware language applications.